December 31, 2013

Facebook wants to know if you trust it. But it's keeping all the answers to itself.

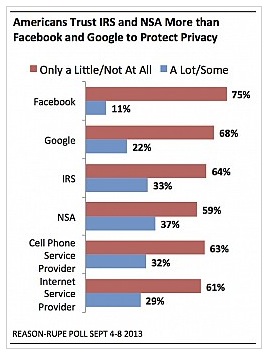

By Brian Fung

I recently got a prompt from Facebook to take a short survey on my user experience. You might've gotten one, too. It's quick and painless: the company gives you a multiple-choice form and starts by asking how happy you are with Facebook, whether the service is easy to use, and whether it's reliable.

But then Facebook asks you something really interesting. It asks whether you trust it. What that means isn't exactly clear. Trust Facebook to do what? To be a reliable catalog of your life? To keep your data out of the hands of the NSA? To show you only the FarmVille updates you care about? The ambiguity isn't much of a surprise. It's a perfect reflection of the broader Silicon Valley philosophy about data: Explicit definitions are unnecessary if you have enough respondents to form an average understanding of what "trustworthy" implies. Let people answer according to their own interpretation of the word, and the aggregate results from the whole user base should be sufficiently revealing.

Rather more enlightening is the fact that Facebook cared to ask at all. Someone there is at least curious about the answers, if not actively worried about them. And there's good reason for that: In polling by third parties, Facebook hasn't fared well on the question of trust. Nearly 60 percent of respondents to an AP/CNBC survey last year [1] said they had "little or no faith" in Facebook's commitment to privacy. Active users of Facebook were in fact more likely to say so than non-users of the service. Then, this year, Reason ran a poll in the wake of the Edward Snowden revelations about U.S. surveillance. The results [2] were remarkable. Seventy-five percent of respondents said they trusted Facebook only a little or not at all to protect their privacy. By contrast, the share of people saying the same about the NSA came to just 59 percent.
More people, in other words, were distrustful of Facebook than of the NSA, even in the weeks following massive unauthorized disclosures about the agency's controversial snooping activity.

If Facebook performs so dismally on third-party surveys, what does its own user base tell the company? When I asked Facebook what insights it had learned from the questionnaire, a spokesman told me the company does not share the data it collects from the survey, even though it regularly asks us for data. "We are constantly working to improve our service," the spokesman said, "and getting regular feedback from the people who use it is an invaluable part of the process."

Facebook might actually gain by releasing its results, whatever they happen to look like. Suppose the numbers turn out to look really bad. With solid majorities of Americans already skeptical of Facebook's privacy practices, it's not as if the company's score on that count could get much worse. And clearly people continue to use Facebook anyway, as the AP/CNBC results show.

[1] http://www.nbcnews.com/technology/we-may-not-trust-facebook-we-dont-quit-it-either-775670

[2] http://reason.com/poll/2013/09/27/poll-on-privacy-irs-and-nsa-deemed-more